Profile-Guided Optimization (PGO) automatically boosts application performance by using runtime profiling data to guide compiler optimizations. By focusing and tailoring the compiler’s optimizations to hot code paths, PGO can reduce both end-user latency and cost of capacity by 10-30%. It can do this without requiring the developer to change source code or even look at profiles.

PGO gets most of its performance gains by improving a processor’s instruction fetch efficiency. On modern data-center workloads, processors spend a lot of time stalled on instruction cache misses, TLB misses, and branch mispredictions (typically called front-end stalls) [Google]. Modern software, with its layers of abstraction, dependencies, error handling, dynamic dispatch, and sheer binary size, makes it difficult for processors to fetch instructions efficiently. The following chart shows that 20-45% of processor cycles are lost to front-end stalls on a real Go service profiled in production:

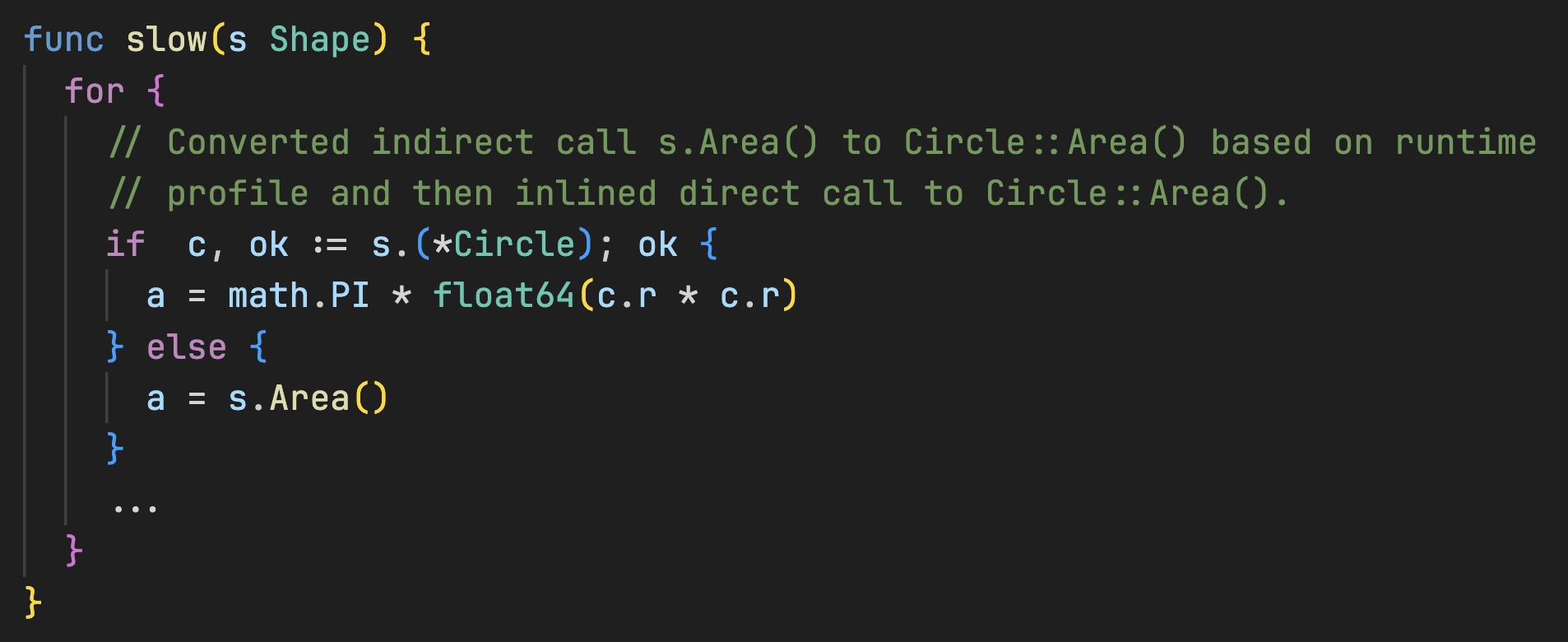

PGO improves fetch efficiency by separating frequently executed (hot) code paths from cold code and by reducing the overhead on those hot paths. The following example in Go demonstrates these optimizations. The Shape interface defines an Area method and has two implementations, Square and Circle. The slow function invokes the Area method of a Shape instance in a for-loop; each call is dispatched dynamically through the interface, which incurs a runtime cost.
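A minimal sketch of the example described above. The Shape, Square, Circle, Area, and slow names come from the text; the struct fields and the signature of slow (a Shape plus an iteration count) are assumptions made for illustration.

```go
package main

import (
	"fmt"
	"math"
)

// Shape is the interface from the text: anything that can report its area.
type Shape interface {
	Area() float64
}

// Square and Circle are the two implementations. The field names are assumed.
type Square struct{ Side float64 }

func (s Square) Area() float64 { return s.Side * s.Side }

type Circle struct{ Radius float64 }

func (c Circle) Area() float64 { return math.Pi * c.Radius * c.Radius }

// slow calls Area through the interface n times and sums the results.
// The concrete type of s is not visible at this call site, so each
// s.Area() is an indirect call that cannot be inlined without more information.
func slow(s Shape, n int) float64 {
	total := 0.0
	for i := 0; i < n; i++ {
		total += s.Area()
	}
	return total
}

func main() {
	fmt.Printf("%.2f\n", slow(Circle{Radius: 1}, 1000))
	fmt.Printf("%.2f\n", slow(Square{Side: 2}, 1000))
}
```
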

The following code snippet shows the result of profile-guided optimizations in the Go compiler (v1.21 and later). Assuming that profiling reveals that the Shape instance is most often a Circle, the compiler specializes the call for the Circle type, eliminating the overhead of indirect method dispatch. This in turn lets the compiler inline the Area method, eliminating function call overhead altogether and exposing the inlined code to other optimizations, such as loop transformations, in the surrounding code. Future PGO optimizations such as basic-block reordering can move the else-block out of the hot path.
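The compiler performs this transformation internally, so no such source is ever written by hand. The sketch below is only a source-level approximation of the result, assuming the profile shows Circle as the dominant type. The name fast, the struct fields, and the function signature are hypothetical.

```go
package main

import (
	"fmt"
	"math"
)

type Shape interface {
	Area() float64
}

type Square struct{ Side float64 }

func (s Square) Area() float64 { return s.Side * s.Side }

type Circle struct{ Radius float64 }

func (c Circle) Area() float64 { return math.Pi * c.Radius * c.Radius }

// fast approximates what the loop looks like after PGO has devirtualized
// and inlined the hot call. It returns the same result as calling
// s.Area() n times through the interface.
func fast(s Shape, n int) float64 {
	total := 0.0
	for i := 0; i < n; i++ {
		if c, ok := s.(Circle); ok {
			// Hot path: the type check replaces the indirect call, and the
			// body of Circle.Area is written out here as if it were inlined.
			total += math.Pi * c.Radius * c.Radius
		} else {
			// Cold path: any other Shape still goes through the interface.
			total += s.Area()
		}
	}
	return total
}

func main() {
	fmt.Printf("%.2f\n", fast(Circle{Radius: 1}, 1000))
	fmt.Printf("%.2f\n", fast(Square{Side: 2}, 1000))
}
```

The type check costs one comparison per iteration, which pays off only when the predicted type really is the common one. That is why the compiler needs a profile to decide where to apply it.
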

To further showcase the advantages of PGO, we selected a benchmark from go-json, a well-known GitHub project with 2.7K stars. We ran the "NumberIsValidRegexp" benchmark 10 times on a MacBook Pro M2 Max with 64 GB RAM and measured a 7.5% performance improvement with PGO. The comparison below shows the top 20 most expensive functions before and after applying PGO and reveals significant inlining of hot functions from the regexp package, including step, OnePassNext, and match.

Top 20 expensive functions before applying PGO

Top 20 expensive functions after applying PGO inlining

PGO offers compelling performance benefits without requiring any code changes by the developer. Recently, we also applied PGO to the Go version of the 1-billion-row challenge and demonstrated a 3.6% performance boost in this I/O-intensive benchmark.

Deploying PGO to production and automating it in a modern cloud or on-prem environment is challenging and takes more than toggling a compiler switch. Only a few large tech companies, including Google [Google-AutoFDO], Meta [Meta-Bolt], and Uber, have successfully deployed PGO in their production workflows.

In our next blog post, we will show how to automate PGO in a production setting. If you are keen on reducing your cloud or on-prem data center costs using PGO, we'd love to hear from you. Connect with us to explore how we can help improve your systems' efficiency and reduce costs.

We invite you to join our Slack community, where we continue to explore and discuss these topics further.